Section: New Software and Platforms

SUP

Presentation

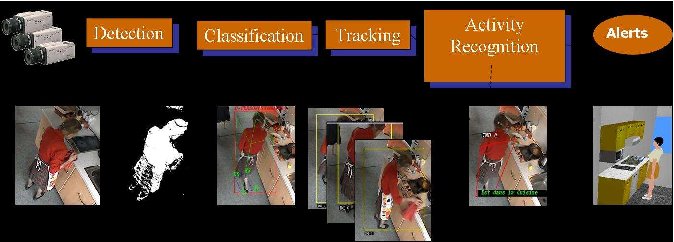

SUP is a Scene Understanding Software Platform (see Figure 5) written in C++ and designed for analyzing video content. SUP is able to recognize events such as a person 'falling' or 'walking'. SUP divides the video processing workflow into several separate modules, from acquisition and segmentation up to activity recognition. Each module has a specific interface, and different plugins (corresponding to algorithms) can be implemented for the same module. This set of plugins makes it easy to build new analysis systems. The order in which the plugins are used and their parameters can be changed at run time, and the result can be visualized on a dedicated GUI. The platform has further advantages, such as easy serialization to save and replay a scene, portability to Mac, Windows or Linux, and easy deployment to quickly set up an experiment anywhere. SUP takes different kinds of input: RGB cameras and depth sensors for online processing, or image/video files for offline processing.

This generic architecture is designed to facilitate:

- sharing of the algorithms among the Stars team.

Currently, 15 plugins are available, covering the whole processing chain. Some plugins use the OpenCV library.
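The module and plugin organisation described above can be illustrated with a minimal C++ sketch. It is not the SUP API: the names `Frame`, `Plugin`, `NamedPlugin` and `Pipeline` are hypothetical and only show how plugins sharing one interface might be chained, reordered and replaced at run time.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical data passed between stages; the real SUP data types are not
// described in this section.
struct Frame {
    int id = 0;
    std::vector<std::string> trace;  // names of the plugins that processed the frame
};

// Hypothetical common interface implemented by every plugin of the chain.
class Plugin {
public:
    virtual ~Plugin() = default;
    virtual std::string name() const = 0;
    virtual void process(Frame& frame) = 0;
};

// Toy plugin: records its name in the frame instead of running a real algorithm.
class NamedPlugin : public Plugin {
public:
    explicit NamedPlugin(std::string name) : name_(std::move(name)) {}
    std::string name() const override { return name_; }
    void process(Frame& frame) override { frame.trace.push_back(name_); }

private:
    std::string name_;
};

// Ordered chain of plugins whose content and order can change at run time.
class Pipeline {
public:
    void append(std::unique_ptr<Plugin> plugin) {
        assert(plugin != nullptr);
        stages_.push_back(std::move(plugin));
    }

    // Exchange the positions of two stages (throws std::out_of_range on a bad index).
    void swap_stages(std::size_t i, std::size_t j) {
        std::swap(stages_.at(i), stages_.at(j));
    }

    // Substitute another implementation for the stage at position i.
    void replace(std::size_t i, std::unique_ptr<Plugin> plugin) {
        assert(plugin != nullptr);
        stages_.at(i) = std::move(plugin);
    }

    // Run every stage on the frame, in the current order.
    void run(Frame& frame) const {
        for (const auto& stage : stages_) {
            stage->process(frame);
        }
    }

private:
    std::vector<std::unique_ptr<Plugin>> stages_;
};
```

In this sketch, a chain would be built by appending, for instance, `NamedPlugin` instances named after the acquisition, segmentation and activity recognition stages. Calling `replace` on the segmentation stage then stands in for selecting a different algorithm for the same module without rebuilding the rest of the chain.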

The goals of SUP are twofold:

- From a video understanding point of view, to allow Stars researchers to share the implementation of their algorithms through this platform.

- From a software engineering point of view, to integrate the results of the dynamic management of vision applications when applied to video analytics.

The plugins cover the following research topics:

- algorithms: 2D/3D mobile object detection, camera calibration, reference image updating, 2D/3D mobile object classification, sensor fusion, 3D mobile object classification into physical objects (individual, group of individuals, crowd), posture detection, frame-to-frame tracking, long-term tracking of individuals, groups of people or crowds, global tracking, basic event detection (for example entering a zone, falling, ...), human behaviour recognition (for example vandalism, fighting, ...) and event fusion; 2D and 3D visualisation of simulated temporal scenes and of real scene interpretation results; evaluation of object detection, tracking and event recognition; image acquisition (RGB and RGBD cameras) and storage; video processing supervision; data mining and knowledge discovery; image/video indexing and retrieval;

- languages: scenario description, empty 3D scene model description, video processing and understanding operator description;

- knowledge bases: scenario models and empty 3D scene models;

- learning techniques for event detection and human behaviour recognition.

Improvements

Currently, the OpenCV library is fully integrated with SUP. OpenCV provides standardized data types, many video analysis algorithms and easy access to OpenNI sensors such as the Kinect or the ASUS Xtion PRO LIVE.

To supervise the progress of updates to the SUP Git repository, an evaluation script is launched automatically every day. This script updates to the latest version of SUP, then compiles the SUP core and the SUP plugins. It executes the full processing chain (from image acquisition to activity recognition) on selected data-set samples. The performance obtained is compared with that of the previous version (i.e. the day before). This script has the following objectives:

- Check the status of SUP daily and detect compilation bugs, if any.

- Monitor SUP performance daily to detect any bug that degrades its performance or efficiency.
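The comparison step of such a daily check can be sketched as follows. This is an illustration under stated assumptions, not the actual evaluation script: the `Metrics` table, the `find_regressions` function, the tolerance value and the convention that a higher score is better are all hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical metric table: metric name -> score, where higher is better.
using Metrics = std::map<std::string, double>;

// Returns the names of the metrics that dropped by more than `tolerance`
// between the previous run and the current one. A metric that is missing
// from the current run is also reported, since it can no longer be checked.
std::vector<std::string> find_regressions(const Metrics& previous,
                                          const Metrics& current,
                                          double tolerance) {
    assert(tolerance >= 0.0);
    std::vector<std::string> regressions;
    for (const auto& entry : previous) {
        const auto it = current.find(entry.first);
        if (it == current.end() || it->second < entry.second - tolerance) {
            regressions.push_back(entry.first);
        }
    }
    return regressions;
}
```

A non-empty result would flag the day's build for inspection. The tolerance keeps small run-to-run fluctuations from being reported as regressions; its value would have to be chosen per metric in practice.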

The software is already widely disseminated among researchers, universities, and companies:

- Nice University (Informatique Signaux et Systèmes de Sophia), University of Paris Est Créteil (UPEC - LISSI-EA 3956)

- European partners: Lulea University of Technology, Dublin City University, ...

- Industrial partners: Toyota, LinkCareServices, Digital Barriers

Updates and presentations of our framework can be found on our team website, https://team.inria.fr/stars/software. Detailed tips for users are given on our Wiki website, http://wiki.inria.fr/stars, and the sources are hosted thanks to the Inria software developer team SED.